Scientists at Meta have used artificial intelligence (AI) and noninvasive brain scans to unravel how thoughts are translated into typed sentences, two new studies show.

In one study, scientists developed an AI model that decoded brain signals to reproduce sentences typed by volunteers. In the second study, the same researchers used AI to map how the brain actually produces language, turning thoughts into typed sentences.

The findings could one day support a noninvasive brain-computer interface to help people with brain lesions or injuries communicate, the scientists said.

“This was a real step in decoding, especially with noninvasive decoding,” Alexander Huth, a computational neuroscientist at the University of Texas at Austin who was not involved in the research, told Live Science.

Brain-computer interfaces that use similar decoding techniques have been implanted in the brains of people who have lost the ability to communicate, but the new studies could support a potential path to wearable devices.

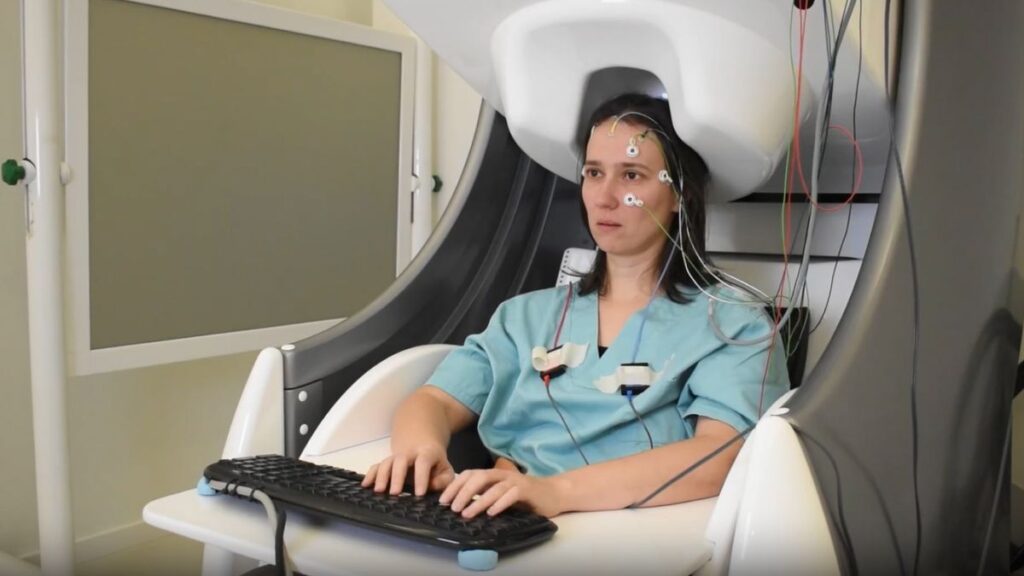

In the first study, the researchers used a technique called magnetoencephalography (MEG), which measures the magnetic field created by electrical impulses in the brain, to track neural activity while participants typed sentences. Then, they trained an AI language model to decode the brain signals and reproduce the sentences from the MEG data.

The model decoded the letters that participants typed with 68% accuracy. Frequently occurring letters were decoded correctly more often, while less-common letters, like Z and K, came with higher error rates. When the model made mistakes, it tended to substitute characters that were physically close to the target letter on a QWERTY keyboard, suggesting that the model uses motor signals from the brain to predict which letter a participant typed.
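To make the two measurements in that paragraph concrete, here is a minimal Python sketch of how character-level accuracy and "nearby key" substitutions might be scored. It is an illustration only, not the researchers' code: the function names, the row offsets used to approximate a staggered QWERTY layout, the 1.5-key distance threshold and the example strings are all assumptions made for this sketch.

```python
# Hypothetical illustration; not the study's code, data, or scoring method.
QWERTY_ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
# Assumed horizontal stagger of each row, in key widths.
ROW_OFFSETS = [0.0, 0.25, 0.75]


def key_positions():
    """Map each letter to an approximate (x, y) position on the keyboard."""
    positions = {}
    for row_idx, (row, offset) in enumerate(zip(QWERTY_ROWS, ROW_OFFSETS)):
        for col_idx, letter in enumerate(row):
            positions[letter] = (col_idx + offset, float(row_idx))
    return positions


POSITIONS = key_positions()


def key_distance(a, b):
    """Euclidean distance between two letter keys, in key widths."""
    (xa, ya), (xb, yb) = POSITIONS[a], POSITIONS[b]
    return ((xa - xb) ** 2 + (ya - yb) ** 2) ** 0.5


def score(typed, decoded, near=1.5):
    """Return letter accuracy and the share of errors that land on a nearby key.

    `near` is an assumed threshold: keys within this distance count as neighbors.
    Only positions where both characters are letters a-z are scored.
    """
    if len(typed) != len(decoded):
        raise ValueError("typed and decoded must have the same length")
    pairs = [
        (t, d)
        for t, d in zip(typed.lower(), decoded.lower())
        if t in POSITIONS and d in POSITIONS
    ]
    if not pairs:
        raise ValueError("no letter pairs to score")
    errors = [(t, d) for t, d in pairs if t != d]
    near_errors = sum(key_distance(t, d) <= near for t, d in errors)
    return {
        "accuracy": (len(pairs) - len(errors)) / len(pairs),
        "near_error_fraction": near_errors / len(errors) if errors else 0.0,
    }


# Made-up example: "e" decoded as "w" and "o" decoded as "p" are both neighbor errors.
result = score("hello", "hwllp")
```

In this made-up example, three of five letters match, and both mistakes fall on keys directly beside the intended one, which is the kind of pattern the researchers read as evidence of motor signals.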

The team’s second study built on these results to show how language is produced in the brain while a person types. The scientists collected 1,000 MEG snapshots per second as each participant typed a few sentences. From these snapshots, they decoded the different phases of sentence production.

Decoding your thoughts with AI

The researchers found that the brain first generates information about the context and meaning of the sentence, and then produces increasingly granular representations of each word, syllable and letter as the participant types.

“These results confirm the long-standing predictions that language production requires a hierarchical decomposition of sentence meaning into progressively smaller units that ultimately control motor actions,” the authors wrote in the study.

To prevent the representation of one word or letter from interfering with the next, the brain uses a “dynamic neural code” to keep them separate, the team found. This code constantly shifts where each piece of information is represented in the language-producing parts of the brain.

This shifting lets the brain link successive letters, syllables and words while retaining information about each for longer periods. However, the MEG experiments could not pinpoint exactly where in those brain regions each of these representations of language arises.

Taken together, the two studies, which have not yet been peer-reviewed, could help scientists design noninvasive devices that improve communication for people who have lost the ability to speak.

Although the current setup is too bulky and too sensitive to work properly outside a controlled lab environment, advances in MEG technology may open the door to future wearable devices, the researchers wrote.

“I think they’re really at the cutting edge of methods here,” Huth said. “They are definitely doing as much as we can do with current technology in terms of what they can pull out of these signals.”